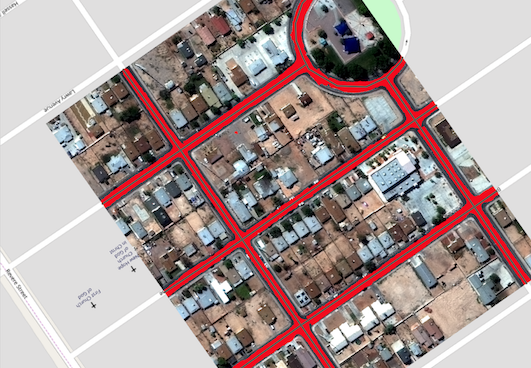

Over half the world's population lives in urban areas, many in unplanned urban sprawl. The high rate of urbanization is a major challenge for keeping cities safe, resilient, and sustainable, which is the aim of the UN's Sustainable Development Goal 11. One component of achieving this goal is accurate maps of buildings.

With complete maps, urban planners can more effectively improve infrastructure, including public transit, roads, and waste management. Toward this goal, we're working on machine learning (ML) tools to recognize buildings and speed up the urban mapping process. Autonomously mapping buildings remains an area of active research, as human-level precision is very difficult to attain.

The pixel segmentation maps that such tools produce can be vectorized (i.e., traced) autonomously, validated by a human, and then imported into an existing map (e.g., OpenStreetMap). This is the ultimate goal for automated mapping algorithms. Our current building prediction tool is somewhere in the low to medium precision range.

We're testing an approach that uses Google's DeepLabV3+ segmentation algorithm. Like other segmentation models, it's designed to predict, for each pixel, whether that pixel is part of a building. We chose DeepLabV3+ because it is relatively powerful (making use of developments like depthwise separable convolution, atrous spatial pyramid pooling, and an Xception backbone) and has been a top performer on competition datasets including PASCAL VOC and Cityscapes. A number of other groups, including SpaceNet Challenge participants, Azavea, Mapbox, Microsoft, and Ecopia, have also produced promising building mapping results.

We trained the model using RGB images from the SpaceNet Challenge dataset, which draws from a broad set of regions. Specifically, we combined data from Las Vegas, Paris, Shanghai, and Khartoum. Our aim was to train a single, broadly applicable model that performs well in many areas of the world.

We also tweaked the model's prediction task: instead of predicting whether each pixel belonged to a building or not, we applied the signed distance transform to the building masks using scikit-fmm and had the model predict those distance values (as shown in the following figure).

Process of training a building segmentation algorithm. Using RGB satellite images (left) and true building locations (middle), we train an algorithm to recognize which pixels belong to a building. The animation shows progressive improvement from early to late training. Pixels are colored based on the signed distance transform, which encodes their distance from a building border: red pixels are inside a building boundary, blue pixels are outside, and color intensity indicates absolute distance from the border.

We've deployed this model in our UrChn tool to track Urban Change. UrChn allows users to superimpose a grid over urban zones, where hot-colored squares indicate areas in which the model predicts a larger building footprint (in sq. meters) than exists in the OpenStreetMap baselayer.
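To make the signed distance target described above concrete, here is a minimal sketch of turning a binary building mask into a signed distance map. The post computed its targets with scikit-fmm (whose `skfmm.distance` measures distance to the zero contour of a level-set array); this sketch instead swaps in SciPy's `scipy.ndimage.distance_transform_edt`, which measures distances between pixel centers, so values right at the border differ slightly from a level-set solution. The sign convention (positive inside, negative outside) is our assumption, since the caption only says red is inside and blue is outside, and the `max_dist` truncation is a hypothetical option rather than something the post describes.

```python
from typing import Optional

import numpy as np
from scipy import ndimage


def signed_distance(mask: np.ndarray, max_dist: Optional[float] = None) -> np.ndarray:
    """Signed Euclidean distance (in pixels) from each pixel to the building border.

    Positive inside buildings, negative outside (an assumed convention).
    Expects the mask to contain both building and background pixels.
    `max_dist` is a hypothetical option that truncates large distances.
    """
    mask = np.asarray(mask, dtype=bool)
    # distance_transform_edt gives, for every nonzero pixel, the distance to
    # the nearest zero pixel; zero pixels get 0.
    inside = ndimage.distance_transform_edt(mask)    # > 0 only on building pixels
    outside = ndimage.distance_transform_edt(~mask)  # > 0 only on background pixels
    sdt = inside - outside
    if max_dist is not None:
        # Optionally cap the magnitude so far-away pixels share one value
        sdt = np.clip(sdt, -max_dist, max_dist)
    return sdt
```

For a 5x5 tile with a single 3x3 building in the middle, the center pixel gets 2.0, the building's edge pixels get 1.0, and the background pixels directly next to the building get -1.0.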

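Because the model above predicts signed distances rather than building/non-building labels, its output has to be turned back into a mask before it can be scored or traced. The post does not say how this is done or how its "low to medium precision" was measured, so the sketch below is an assumption on both counts: it thresholds the predicted map at zero (consistent with a positive-inside sign convention) and computes simple pixel-level precision and recall against a ground-truth mask. Object-level scores, which match whole buildings rather than pixels, are also common for building mapping. Both function names are hypothetical.

```python
import numpy as np


def sdt_to_mask(pred_sdt: np.ndarray) -> np.ndarray:
    """Recover a building mask from a predicted signed distance map.

    Assumes positive values mean "inside a building".
    """
    return np.asarray(pred_sdt) > 0


def pixel_precision_recall(pred: np.ndarray, truth: np.ndarray) -> tuple[float, float]:
    """Pixel-level precision and recall of a predicted building mask."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)   # predicted building, truly building
    fp = np.count_nonzero(pred & ~truth)  # predicted building, truly background
    fn = np.count_nonzero(~pred & truth)  # predicted background, truly building
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Precision answers "of the pixels we called building, how many really are?", which is the quantity a human validator would most directly feel when cleaning up false positives before an import.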
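Two of the DeepLabV3+ building blocks named above are easier to grasp with a toy example. The NumPy functions below illustrate the general techniques only; they are not DeepLabV3+'s own code, and the names are hypothetical. Atrous (dilated) convolution spaces the kernel taps `rate` samples apart, widening the receptive field without adding weights. Depthwise separable convolution factors a k x k convolution into one k x k filter per input channel followed by a 1x1 convolution that mixes channels, which sharply cuts the weight count.

```python
import numpy as np


def atrous_conv1d(x: np.ndarray, w: np.ndarray, rate: int) -> np.ndarray:
    """'Valid' 1-D atrous (dilated) cross-correlation.

    Output i is sum_j w[j] * x[i + rate * j]; rate=1 is an ordinary
    cross-correlation.
    """
    k = len(w)
    span = (k - 1) * rate + 1  # input samples covered by one output
    n_out = len(x) - span + 1
    # x[i : i + span : rate] picks exactly the k taps spaced `rate` apart
    return np.array([np.dot(w, x[i : i + span : rate]) for i in range(n_out)])


def conv_params(k: int, c_in: int, c_out: int, separable: bool) -> int:
    """Weight count of a k x k conv layer (biases ignored)."""
    if separable:
        # depthwise: one k x k filter per input channel
        # pointwise: a 1 x 1 conv mapping c_in channels to c_out
        return k * k * c_in + c_in * c_out
    return k * k * c_in * c_out
```

For a 3x3 layer mapping 256 channels to 256, `conv_params` gives 589,824 weights for a standard convolution versus 67,840 for the separable version, roughly 8.7x fewer.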

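Vectorizing (tracing) a segmentation map, as described above, starts by separating the predicted building pixels into individual buildings. The sketch below covers only that first step: it labels 4-connected groups of building pixels and summarizes each with its pixel area and bounding box. It is a simplified stand-in, not the post's pipeline; a real tracer would go on to emit polygon outlines, for example with `rasterio.features.shapes` or OpenCV's `findContours`, and `scipy.ndimage.label` performs the same labeling step in library code. The function names are hypothetical.

```python
from collections import deque

import numpy as np


def label_buildings(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Give each 4-connected group of building pixels its own integer label.

    Returns (labels, count); background pixels keep label 0.
    """
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    rows, cols = mask.shape
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or labels[r, c]:
                continue  # background, or already part of a building
            count += 1
            labels[r, c] = count
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of this building
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        queue.append((ny, nx))
    return labels, count


def footprints(labels: np.ndarray, count: int) -> list[dict]:
    """Pixel area and (min_row, min_col, max_row, max_col) box per building."""
    out = []
    for i in range(1, count + 1):
        ys, xs = np.nonzero(labels == i)
        bbox = (int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max()))
        out.append({"area_px": int(ys.size), "bbox": bbox})
    return out
```

Keeping each building as a separate object is what makes the human validation step practical: a reviewer can accept or reject footprints one at a time before anything is imported into OpenStreetMap.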
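The post does not describe how UrChn computes its grid, so the following is a hypothetical reconstruction of the comparison it describes. It assumes the model's predicted building mask and a rasterized version of the OpenStreetMap footprints are aligned arrays of the same shape, sums each mask over square grid cells, and converts the difference to square meters using a known per-pixel ground area. Positive cells are those where the model sees more building footprint than OpenStreetMap contains, i.e. candidates for the hot-colored squares. The function name and parameters are our own.

```python
import numpy as np


def footprint_gap_grid(pred_mask: np.ndarray, osm_mask: np.ndarray,
                       cell_px: int, pixel_area_m2: float) -> np.ndarray:
    """Per-cell (predicted - mapped) building area in square meters.

    Assumes both masks are aligned rasters with the same shape. Pixels at the
    right/bottom edges that do not fill a whole cell are dropped.
    """
    if pred_mask.shape != osm_mask.shape:
        raise ValueError("masks must have the same shape")
    h, w = pred_mask.shape
    gh, gw = h // cell_px, w // cell_px
    # +1 where only the model sees a building, -1 where only OSM has one
    diff = pred_mask.astype(int) - osm_mask.astype(int)
    diff = diff[: gh * cell_px, : gw * cell_px]
    # Reshape to (grid_row, row_in_cell, grid_col, col_in_cell) and sum per cell
    cell_sums = diff.reshape(gh, cell_px, gw, cell_px).sum(axis=(1, 3))
    return cell_sums * pixel_area_m2
```

For example, with hypothetical 0.5 m pixels (`pixel_area_m2=0.25`), a cell where the model marks 4 building pixels and OpenStreetMap has 1 reports a gap of 0.75 sq. meters.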